Last year the ICAEW hosted their first in-person event focused on analytics, featuring some fascinating talks centred around people-driven decision making. This year the event returned with an even bigger audience – a 200-ticket sellout!

“Data is becoming the world’s largest trade and fundamental to running any business or government today...we need to collect, understand and act upon it if we are to solve the climate and biodiversity crises which threaten to engulf us.”

Risk and governance were high on everyone’s minds. Bilal Alhmoud, a Privacy, Risk and Compliance SME at Microsoft, opened the day by outlining the key risks associated with AI. These include data leakage (employees leaking sensitive data externally, whether inadvertently or intentionally!), oversharing (users having access to sensitive internal data that they shouldn’t) and non-compliance (use of AI apps to generate unethical or high-risk content). Bilal highlighted a Microsoft survey which found that 97% of organisations are concerned about data leakage risks.

Later in the day, Nick Deveney from Eden Smith highlighted the risk of becoming hyper-focused, and ultimately stuck, on getting ESG data right, which is preventing some organisations from starting to use ESG data at all. Nick suggested that we need to balance the risk of non-compliance against the organisational rewards we can gain from capturing and analysing the data. It is always useful to look at the carrot rather than just the stick, and the benefits of integrating ESG considerations into our business include reduced operating costs (e.g. from greater energy efficiency!), better talent acquisition, improved employee satisfaction, better shareholder returns and stronger community loyalty. By highlighting that many of the challenges of working with ESG data are in fact identical to those in AI, Nick encouraged the audience to be more confident in working with ESG data.

This year’s conference included a welcome focus on solutions. Bilal introduced the AI Shared Responsibility Model, which divides the security burden of AI between AI platforms (like Microsoft), organisations and individual users. David Heath, CEO of Circit, outlined how their solution provides full assurance over the completeness of bank statement data, which serves as the foundation for verifying cash balances, the General Ledger and the financial statements based on it. And Jacinta Linehan from Origin Enterprises shared how they used 5Y Technologies to build a Microsoft-based automated ESG reporting suite covering every stage of data collection, data processing/storage and reporting. Hearing these real-life examples of how organisations are collecting and using data is one of the most valuable aspects of the event, as reflected in one of the audience’s most frequently asked questions: ‘can you give me an example of that in real life?’

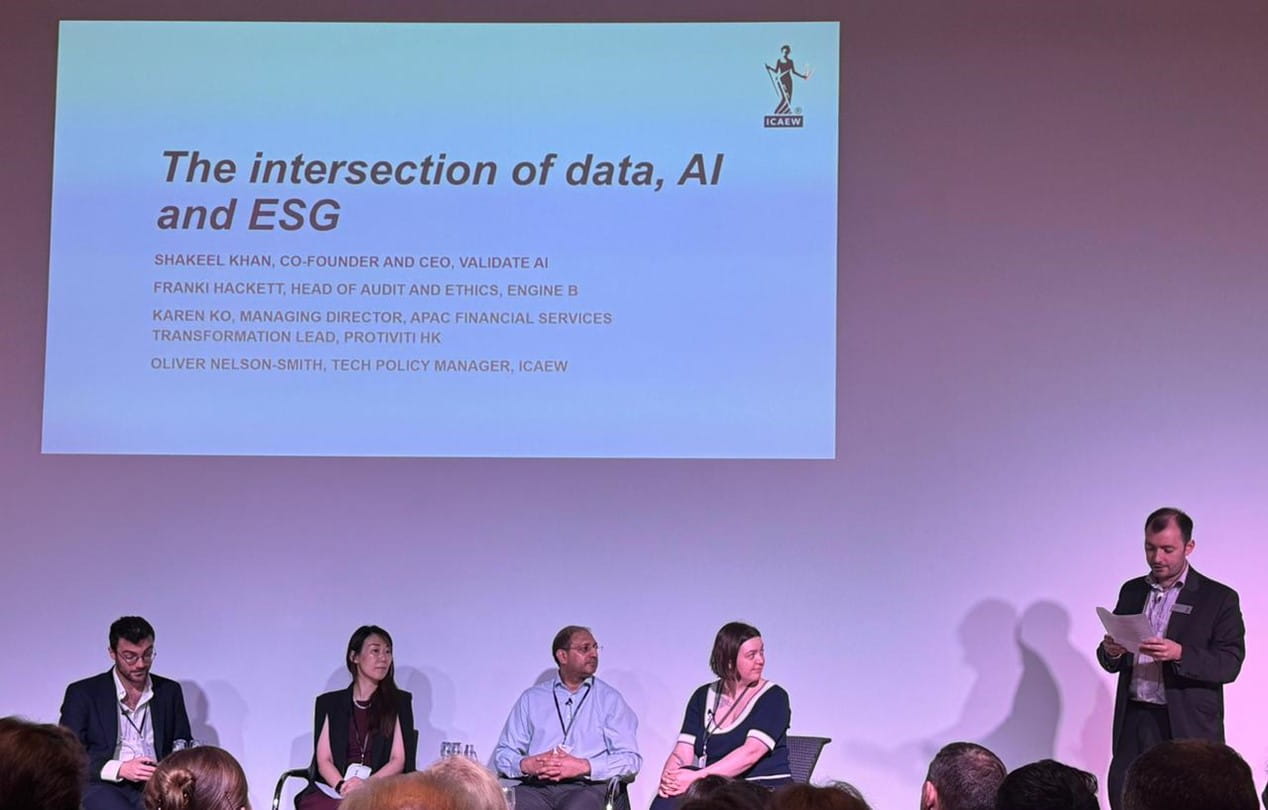

The day’s more reflective discussions focused on trust and trustworthiness, and it was clear from the opening segment – where ICAEW’s Head of Data Analytics and Tech, Ian Pay, used a deepfake version of himself to introduce the day – that this is becoming ever more challenging. In her captivating talk, Monica Odysseos set out a very useful structure for designing and building AI solutions for our organisations in a way that is well controlled and reliable. Shakeel Khan, Co-Founder and CEO of Validate AI, explained the three tenets of trustworthiness in AI: relevance (does the AI address the correct problem?), ethical and legal compliance (is it aligned with our values?), and technical robustness (is the AI developed correctly and without hidden biases?). Shakeel shared lessons learned from Validate AI’s roadshow across industries, highlighting how sectors such as banking, healthcare and education are beginning to apply assurance practices to their AI models, amid growing regulatory pressure on businesses to ensure their AI systems are safe and reliable.

Ian Pay, head of the ICAEW Data Analytics community, noted that while there is an intention to leverage AI and analytics across the sector, very few organisations have AI solutions in production. It was also clear that only a few were fully engaged with ESG: as Malcom noted, the challenges of ESG go beyond those we can easily put numbers on (although, as accountants, we are of course more comfortable with numbers!).

In both arenas, Malcom highlighted that data (like oil, to use the widespread analogy!) is “slippery”, and that to fully unlock the potential of AI, businesses must first lay a solid foundation of data governance and ensure they are prepared for the ethical challenges that come with advanced technologies. AI assurance is essential for maintaining trust in AI systems, and organisations must adopt comprehensive frameworks to ensure their AI deployments are safe, ethical and compliant. The same is true of ESG.

On the whole, the day prompted a lot of reflection and gave us an opportunity to catch up with colleagues across the profession who share our interest in data. Malcom’s closing remarks have also stayed with us: he stressed that all this data, and the financial reports that come from it, are only a means to an end. There is no point collecting it if we do not act on what it shows. That is particularly important with ESG: the figures and the reporting are not important in and of themselves – we need to use that information to change how we behave and how we consume the planet’s resources. That, not the reporting, is what matters.

We’ll be exploring more of these topics in detail over the coming weeks, and you can read a recap of last year’s event, which centred on people-driven decision making.